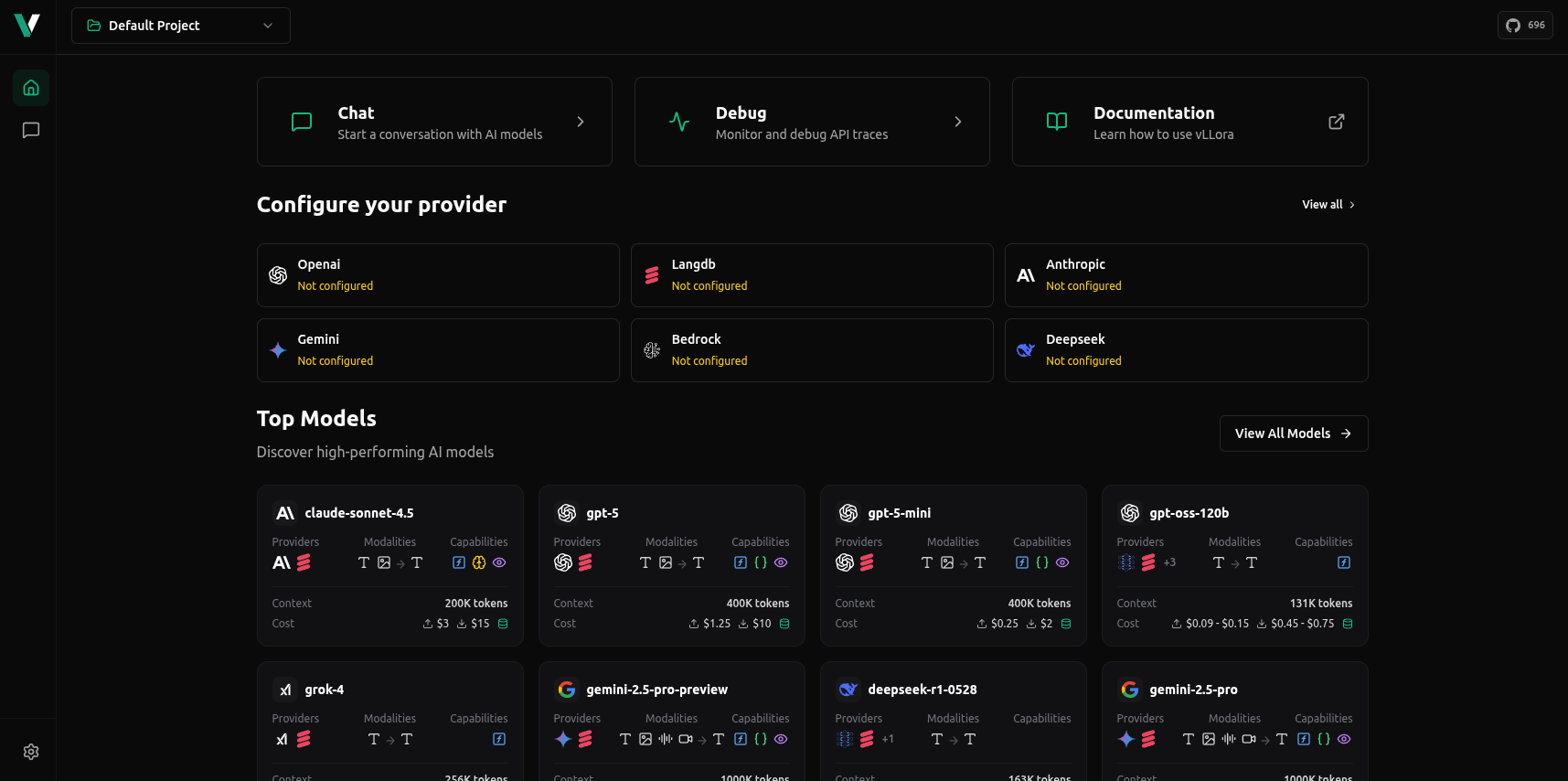

Using vLLora to debug AI agents

Building agents takes many cycles of tweaking prompts, running evaluations, and figuring out what went wrong. vLLora shows you traces in real time so you can debug, monitor, and optimize faster. It's fair-code, runs locally, and stores data in SQLite so you can use it for fine-tuning later. Just swap your OpenAI base URL for your local vLLora endpoint; everything else works the same.

Observe your agent in real time

Step 1: Set up vLLora

- Download and install vLLora using Homebrew, then start it:

```bash
brew tap vllora/vllora
brew install vllora
vllora
```

- Set up your provider.

- Change your OpenAI base URL to point at vLLora: `http://localhost:9090/v1`
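Many OpenAI clients let you make this switch without touching code. For example, the official `openai` Python SDK (v1 and later) reads the `OPENAI_BASE_URL` environment variable when no `base_url` is passed. The sketch below mimics that lookup with the standard library only; `resolve_base_url` and `chat_completions_url` are hypothetical helpers, and unlike the SDK (which falls back to OpenAI's own API) this sketch falls back to the local vLLora endpoint.

```python
import os

# Local vLLora endpoint from the setup step.
VLLORA_BASE_URL = "http://localhost:9090/v1"


def resolve_base_url(env=None):
    """Return the base URL a client should use (hypothetical helper).

    Prefers OPENAI_BASE_URL when it is set; otherwise uses vLLora.
    """
    env = os.environ if env is None else env
    return env.get("OPENAI_BASE_URL", VLLORA_BASE_URL).rstrip("/")


def chat_completions_url(base_url):
    """OpenAI-compatible servers expose chat at <base_url>/chat/completions."""
    return f"{base_url.rstrip('/')}/chat/completions"
```

With this pattern, moving between OpenAI and vLLora is a matter of exporting a different `OPENAI_BASE_URL`, not editing code.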

Step 2: Send a request

You can now send a request to vLLora from the chat section in vLLora or with a curl command.
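The same request a curl command would send can also be built from Python with only the standard library. This is a minimal sketch, assuming vLLora serves the standard OpenAI `/chat/completions` route under the base URL above; the model name and placeholder key are taken from the LangChain example later on this page, and `build_chat_request` and `send` are hypothetical helpers.

```python
import json
import urllib.request

VLLORA_BASE_URL = "http://localhost:9090/v1"


def build_chat_request(prompt, model="openai/gpt-4o-mini", api_key="no_key"):
    """Build (but do not send) an OpenAI-style chat completions request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{VLLORA_BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send(request, timeout=60):
    """Send the request to the running vLLora server and parse the JSON reply."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

With vLLora running, `send(build_chat_request("Hello!"))["choices"][0]["message"]["content"]` returns the model's reply, and the call appears as a new trace.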

Step 3: Debug your agent

Once your request runs through vLLora, you can debug your agent by going through its traces. You can see the exact request sent to the model, the model's response, the time taken to run the request, tool call arguments, and more.
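Sending a request that offers the model a tool is a quick way to see tool call arguments in a trace. The sketch below assumes the standard OpenAI chat completions format, where a tool call's arguments arrive as a JSON string; `get_weather` is a hypothetical tool, and `tool_call_arguments` is a hypothetical helper for comparing what your code receives with what the trace shows.

```python
import json


def weather_tool_payload(prompt, model="openai/gpt-4o-mini"):
    """Chat request body that offers the model one hypothetical tool."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "tools": [
            {
                "type": "function",
                "function": {
                    "name": "get_weather",  # hypothetical tool name
                    "description": "Look up the current weather for a city.",
                    "parameters": {
                        "type": "object",
                        "properties": {"city": {"type": "string"}},
                        "required": ["city"],
                    },
                },
            }
        ],
    }


def tool_call_arguments(response):
    """Return (tool name, parsed arguments) for each tool call in a response."""
    message = response["choices"][0]["message"]
    calls = message.get("tool_calls") or []
    # Arguments are a JSON-encoded string, so decode them before use.
    return [
        (call["function"]["name"], json.loads(call["function"]["arguments"]))
        for call in calls
    ]
```

If the decoded arguments differ from what your tool expects, the trace shows whether the model produced them that way or your code changed them afterwards.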

Tip: Keep the Debug tab open while you experiment — every new request streams in instantly.

Using vLLora with your existing AI Agents

vLLora is OpenAI-compatible, so you can point your existing agent frameworks (LangChain, CrewAI, Google ADK, custom apps, etc.) at vLLora with no code changes beyond the base URL. For example, with LangChain:

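```python
from langchain_openai import ChatOpenAI
from langchain_core.messages import HumanMessage

# Point LangChain's OpenAI chat model at the local vLLora endpoint.
llm = ChatOpenAI(
    base_url="http://localhost:9090/v1",
    model="openai/gpt-4o-mini",
    api_key="no_key",
    temperature=0.2,
)

# Each call made through this client shows up as a trace in vLLora.
response = llm.invoke([HumanMessage(content="Hello!")])
print(response.content)
```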

That's it. From here, you can debug your agents in real time by inspecting every call and tool invocation to understand how your agents behave.

Enhanced Tracing with vLLora Python Package

For even deeper insights, we also provide the vLLora Python package, which complements the OpenAI-compatible approach. It offers enhanced tracing with framework-specific details such as agent workflows, tool calls, and multi-step execution paths.

Example: OpenAI Agents SDK

Install the vLLora package with the `openai` extra:

```bash
pip install 'vllora[openai]'
```

Then initialize vLLora before creating or running any OpenAI agents:

```python
from vllora.openai import init

# Initialize vLLora before creating or running any OpenAI agents.
init()

# Then proceed with your normal OpenAI setup:
from openai import OpenAI

# ...define and run agents...
```

Once initialized, vLLora automatically captures all agent interactions, function calls, and streaming responses with full end-to-end tracing across your workflow.
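How `init()` hooks into the SDK is not described on this page. As a rough, hypothetical illustration of the general idea behind automatic capture (wrapping calls so each one records its name, arguments, duration, and any error), here is a toy tracer. It is not vLLora's implementation; `traced`, `SPANS`, and `lookup_weather` are all made-up names.

```python
import functools
import time

# Hypothetical in-memory span store; a real tracer would export spans elsewhere.
SPANS = []


def traced(fn):
    """Record the name, arguments, duration, and error (if any) of each call."""
    @functools.wraps(fn)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        error = None
        try:
            return fn(*args, **kwargs)
        except Exception as exc:
            error = repr(exc)
            raise
        finally:
            # Runs on both success and failure, so every call leaves a span.
            SPANS.append({
                "name": fn.__name__,
                "args": args,
                "kwargs": kwargs,
                "duration_s": time.perf_counter() - start,
                "error": error,
            })
    return wrapper


@traced
def lookup_weather(city):  # hypothetical tool
    return f"Sunny in {city}"
```

Calling `lookup_weather("Paris")` returns normally and leaves one span in `SPANS`; a tracing package applies the same kind of wrapping to model and tool calls for you.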

Learn more: See our Working with Agent Frameworks guide for detailed integration instructions with OpenAI Agents SDK, Google ADK, and more frameworks.

Next steps

- Quickstart: install vLLora and send your first trace.
- Working with Agent Frameworks (optional): deeper integration with agent frameworks.
- Introduction: an overview of the product and setup details.