Using vLLora with OpenAI Agents SDK

The OpenAI Agents SDK makes it easy to build agents with handoffs, streaming, and function calling. The hard part? Seeing what's actually happening when things don't work as expected.

Set Up vLLora

First, install vLLora using Homebrew, then start it:

```shell
brew tap vllora/vllora
brew install vllora

# Start vLLora
vllora
```
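Before pointing any code at vLLora, it can help to confirm that the server is up. The sketch below only checks whether something accepts TCP connections on `localhost:9090`, the address used throughout this guide. It assumes that default port and does not verify that the listener is actually vLLora. `is_listening` is a hypothetical helper, not part of vLLora.

```python
import socket


def is_listening(host: str = "localhost", port: int = 9090, timeout: float = 1.0) -> bool:
    """Return True if something accepts TCP connections on host:port."""
    try:
        # create_connection resolves the host and performs a TCP handshake
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host could not be resolved
        return False


if __name__ == "__main__":
    if is_listening():
        print("Something is listening on localhost:9090")
    else:
        print("Nothing is listening on localhost:9090; is `vllora` running?")
```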

Quick Setup

Route your OpenAI requests through vLLora by changing the base URL:

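```python
from openai import OpenAI

# Point the standard OpenAI client at the local vLLora server
client = OpenAI(
    api_key="no_key",  # placeholder value used throughout this guide
    base_url="http://localhost:9090/v1",
)
```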

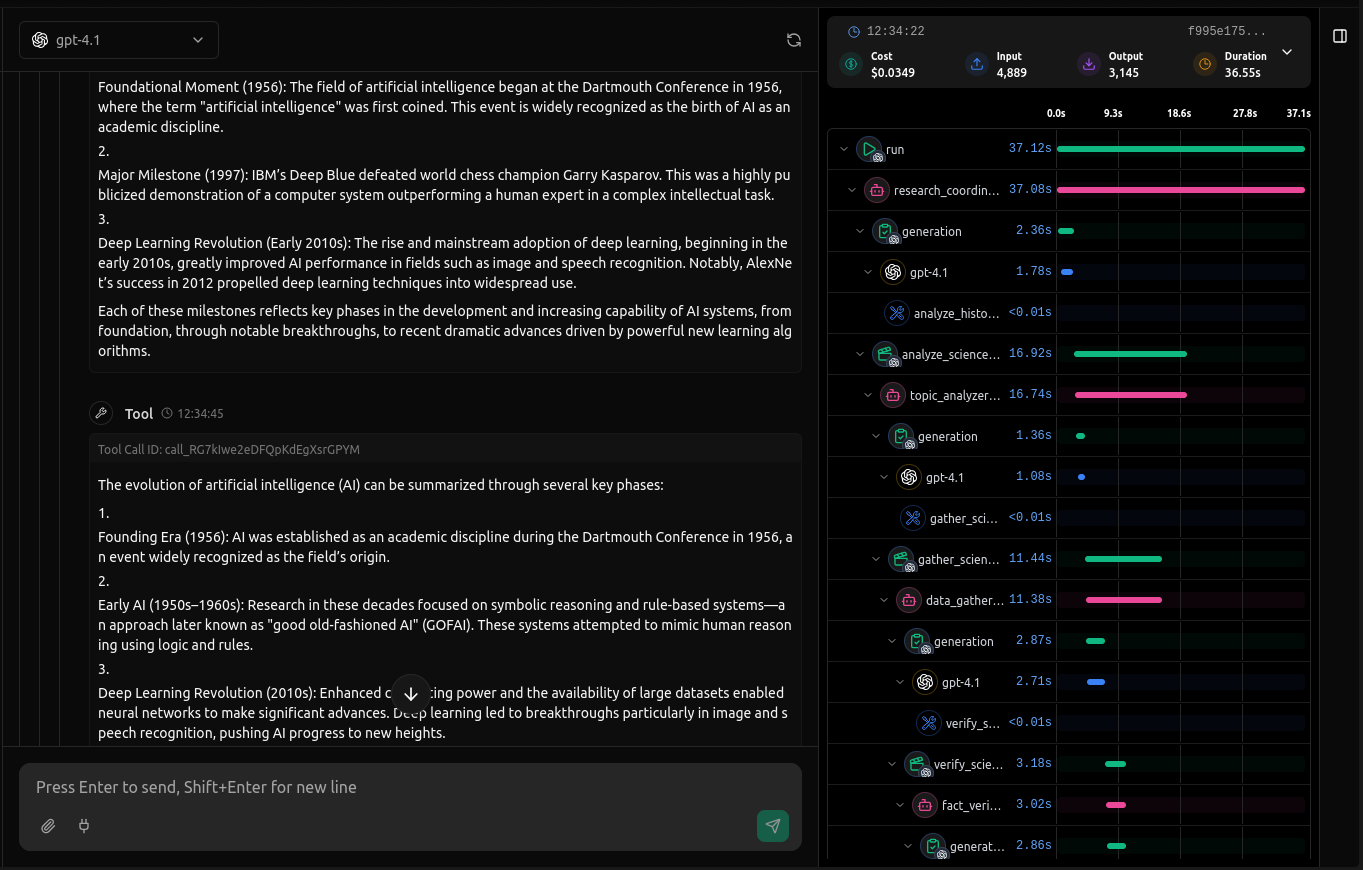

This gives you basic traces showing model calls, latencies, token usage, and function executions. You'll see what's being sent and received, but you're missing agent-specific context like handoffs, state transitions, and streaming details.
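Changing `base_url` works because the OpenAI client sends ordinary HTTP requests to paths under that URL. To illustrate roughly what vLLora receives, the stdlib sketch below builds, but does not send, a request with the same shape as a `client.chat.completions.create(...)` call: a JSON body posted to `{base_url}/chat/completions` with a bearer token. The model name `gpt-4o-mini` is a placeholder assumption, and `build_chat_request` is a hypothetical helper that is not part of the OpenAI or vLLora libraries.

```python
import json
import urllib.request

# Same base URL as the OpenAI client in Quick Setup
BASE_URL = "http://localhost:9090/v1"


def build_chat_request(base_url: str, model: str, prompt: str,
                       api_key: str = "no_key") -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style Chat Completions request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


req = build_chat_request(BASE_URL, "gpt-4o-mini", "Say hello")
print(req.get_method(), req.full_url)
# prints: POST http://localhost:9090/v1/chat/completions

# Sending it requires vLLora to be running:
# with urllib.request.urlopen(req) as resp:
#     print(json.loads(resp.read()))
```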

Full Agent Visibility

For complete tracing with agent state, handoffs, and streaming context, use the vLLora Python library:

```shell
pip install 'vllora[openai]'
```

Set your vLLora endpoint:

```shell
export VLLORA_API_BASE_URL=http://localhost:9090
```
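This guide uses the same vLLora address in two forms: `http://localhost:9090` for `VLLORA_API_BASE_URL` and `http://localhost:9090/v1` as the OpenAI client's `base_url`. If a project uses both, a small hypothetical helper like the one below can derive one from the other so they stay in sync. It assumes that the `/v1` suffix relationship shown in this guide holds for your deployment.

```python
import os

# Default taken from the examples in this guide
DEFAULT_VLLORA_URL = "http://localhost:9090"


def openai_base_url() -> str:
    """Derive an OpenAI-compatible base URL from VLLORA_API_BASE_URL (hypothetical helper)."""
    root = os.environ.get("VLLORA_API_BASE_URL", DEFAULT_VLLORA_URL).rstrip("/")
    # Assumption: the OpenAI-compatible routes live under /v1 on the same host
    return root + "/v1"
```

You could then pass `openai_base_url()` as `base_url` when constructing the client from Quick Setup.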

Initialize vLLora before creating agents:

```python
from vllora.openai import init

# Initialize vLLora once, before any agents are created
init()

# Now define your agents
from agents import Agent, Runner

# ...
```

vLLora automatically captures agent interactions, handoffs, function calls, and streaming responses. No client configuration is needed: initialize once, and all your agent workflows are traced end to end.

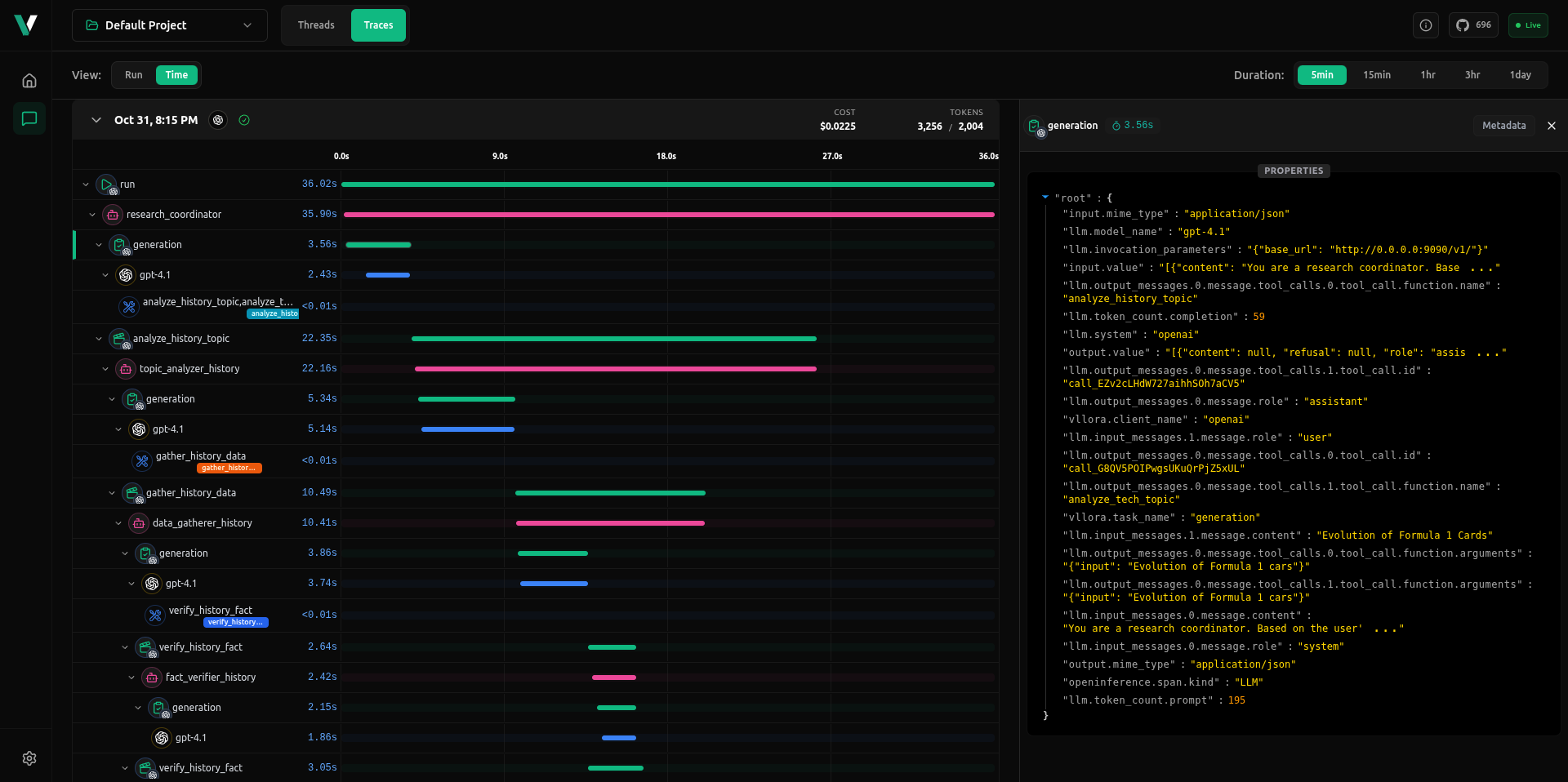

You'll see agent state transitions, handoff triggers, function inputs and outputs, and streaming chunks bundled into unified traces. Each trace shows the complete execution path with timing information, so you can spot bottlenecks and debug multi-agent workflows. Handoffs between agents, function executions, and the start and end of each stream all appear in one place.
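vLLora's UI presents this timing for you. The toy sketch below only illustrates the idea behind reading a trace for bottlenecks. It models a trace as a tree of spans and picks the span with the most self time, meaning its duration minus the time covered by its children. The `Span` class, span names, and timings are made up for illustration and are not vLLora's data model or export format. The sketch also assumes child spans run sequentially without overlap, which may not hold for streaming or parallel tool calls.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Span:
    """A hypothetical trace span: a named step with start/end times in ms."""
    name: str
    start_ms: float
    end_ms: float
    children: List["Span"] = field(default_factory=list)

    @property
    def duration_ms(self) -> float:
        return self.end_ms - self.start_ms

    @property
    def self_time_ms(self) -> float:
        # Time in this span not covered by children (assumes children don't overlap)
        return self.duration_ms - sum(c.duration_ms for c in self.children)


def slowest_step(root: Span) -> Span:
    """Return the span with the largest self time anywhere in the tree."""
    best = root
    stack = [root]
    while stack:
        span = stack.pop()
        if span.self_time_ms > best.self_time_ms:
            best = span
        stack.extend(span.children)
    return best


# Made-up example: a triage agent hands off to a billing agent that calls a tool
trace = Span("triage_agent run", 0, 2400, [
    Span("model call (triage)", 0, 600),
    Span("handoff -> billing_agent", 600, 2400, [
        Span("model call (billing)", 650, 1100),
        Span("function: lookup_invoice", 1100, 2300),
    ]),
])

s = slowest_step(trace)
print(s.name, s.self_time_ms)
# prints: function: lookup_invoice 1200
```

Self time is used instead of total duration so that a parent span, such as the handoff, is not flagged just because it contains a slow child.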

Next Steps

- Get started with vLLora: Quickstart Guide

- Learn about deeper integrations: Working with Agent Frameworks

- Explore the full documentation: Introduction